CyLab researchers shine at PEPR 2021

This year’s conference was likely the largest gathering of privacy engineers ever

Daniel Tkacik

Jun 18, 2021

Source: CyLab

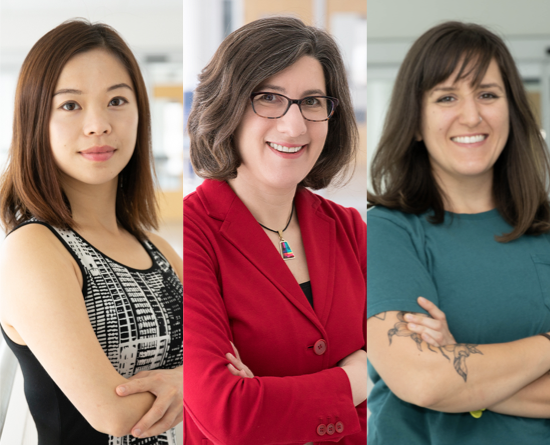

L-R: Yuanyuan Feng, Lorrie Cranor, and Sarah Pearman presented their privacy research at the 2021 PEPR Conference.

Last week, more than 500 people gathered virtually to share and learn about the state of the art in privacy engineering at the third annual Conference on Privacy Engineering Practice and Respect (PEPR), co-hosted by CyLab and the Future of Privacy Forum. That likely makes the event the largest gathering of privacy engineers ever. Nearly 100 attendees joined from outside the US, and roughly one third of domestic attendees were from California, representing big tech companies such as Google, Facebook, and Twitter.

Another notable statistic: CyLab faculty, staff, and students gave three of the conference’s presentations.

Yuanyuan Feng, a postdoctoral researcher in the Institute for Software Research (ISR), presented a recent study that introduced new guidelines for providing privacy choices.

Thanks to privacy regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), there’s a path toward giving people more control over their privacy. Still, Feng says, privacy choices remain hard to find and difficult to use, in part because existing regulations offer little guidance on how to implement privacy requirements. Feng’s team developed a privacy control “design space” to help fill that gap.

Lorrie Cranor, director of CyLab and a professor in the departments of Engineering and Public Policy and ISR, talked about how bathrooms are surprisingly useful in conveying concepts related to both privacy and usability. Cranor co-chaired the conference along with CyLab alumna Lea Kissner, head of Privacy Engineering at Twitter.

Cranor shared a thought experiment she took part in a few years ago, in which she and other attendees at a conference were led to believe that all of the venue’s toilets had been retrofitted as “smart toilets” that analyzed biological waste and tracked individual data. The experience inspired her to create an exercise in which her students design usable notice and consent for smart toilets in public restrooms.

Finally, Sarah Pearman, a Ph.D. student in ISR’s Societal Computing program, presented a case study aimed at improving consent for sharing healthcare data.

In her study, she presented a hypothetical scenario in which a major health insurer builds a chatbot that lets customers ask questions about their coverage. Because the chatbot uses Google Cloud APIs for storage, HIPAA requires special consent from the customer. While the study focused on one particular application, Pearman said it also offers broader insights into people’s understandings and misunderstandings of HIPAA, their preferences around healthcare privacy practices, and their likelihood of reading and comprehending disclosures.